In the past few years, cybersecurity teams have moved beyond traditional CVSS severity ratings, adopting the Exploit Prediction Scoring System (EPSS) and the CISA KEV list to focus on the vulnerabilities that matter most. The move has been an easy decision, given that fewer than 5% of vulnerabilities are ever actually exploited in the wild. As a result, frameworks like EPSS have skyrocketed in popularity. With MITRE now tracking almost 300,000 vulnerabilities, this intense focus on exploitability has been the most effective way for teams to manage their vulnerabilities. However, there is always room for improvement, and NIST is now looking to update, yet again, how we measure vulnerability severity with its new LEV framework.

Today's approach to deciding which vulnerabilities to fix first combines two types of intelligence: (1) predictive forecasting and (2) confirmed, real-world evidence of exploitation. Each comes from its own data source: EPSS, which estimates the probability that a vulnerability will be exploited in the next 30 days, and the CISA Known Exploited Vulnerabilities (KEV) catalog, which lists vulnerabilities with confirmed exploitation in the wild.

This two-part strategy has been a leap forward from the legacy approach of using static CVSS scores alone. For the first time, it has allowed security teams to layer predictive forecasting over confirmed, real-world exploit data. But even with these advances, it's worth exploring whether this approach still leaves gaps.

Although EPSS and KEV both drive modern vulnerability management programs, they play different roles. CISA KEV is a great first place to check for the most critical vulnerabilities being targeted by attackers, but it is limited to only around 1,200 vulnerabilities as of mid-2025. EPSS is more effective for driving an overall vulnerability management program on a day-to-day operational basis.
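As a rough illustration of that division of labor, the Python sketch below orders a backlog by checking KEV membership first and then sorting by EPSS. The `Finding` record, the `triage_order` function, and the placeholder CVE IDs are hypothetical; a real program would load EPSS scores and the KEV catalog from their published feeds.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One vulnerability finding in a hypothetical scanner export."""
    cve_id: str
    epss: float   # EPSS probability of exploitation in the next 30 days (0.0-1.0)
    in_kev: bool  # True if the CVE appears in the CISA KEV catalog


def triage_order(findings: list[Finding]) -> list[Finding]:
    """Sort findings: KEV-listed CVEs first, then by descending EPSS score."""
    # Python sorts False before True, so `not f.in_kev` puts KEV entries first;
    # negating epss sorts higher probabilities earlier within each group;
    # cve_id is a final tiebreaker so the order is deterministic.
    return sorted(findings, key=lambda f: (not f.in_kev, -f.epss, f.cve_id))


if __name__ == "__main__":
    # Placeholder IDs and scores, for illustration only.
    backlog = [
        Finding("CVE-0000-0001", epss=0.02, in_kev=True),
        Finding("CVE-0000-0002", epss=0.91, in_kev=False),
        Finding("CVE-0000-0003", epss=0.15, in_kev=False),
    ]
    for f in triage_order(backlog):
        print(f.cve_id, f.in_kev, f.epss)
```

Sorting on a tuple keeps the logic readable: KEV status acts as a hard tier and EPSS only orders findings within each tier, so a KEV-listed CVE with a low EPSS score still outranks an unlisted one with a high score.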

If EPSS's approach involves one philosophical trade-off, it is its emphasis on what will happen over what has already happened. That is an opinionated stance, not necessarily a flaw, but it does raise the question of what might be missed by de-emphasizing vulnerabilities that have been consistently exploited in recent weeks and months. This is where NIST LEV comes in.

The top three vulnerability management players, Tenable, Qualys, and Rapid7, each have their own severity ratings that factor in exploitability. Their biggest problem: they are all black boxes. Ask any of the three vendors how they arrived at a score, and they will tell you it is part of their "magic sauce" and offer you zero transparency.

In May of this year, NIST took a major step toward changing that by releasing the white paper *Likely Exploited Vulnerabilities: A Proposed Metric for Vulnerability Exploitation Probability*, which gives the industry more structure and transparency in how modern severity levels can be calculated.

The second half of the white paper gets fairly heavy into math, but you don't need to follow every equation to understand its core concept. LEV is designed to address a potential gap in EPSS: what is the probability that a vulnerability has already been exploited, and how should that be weighed against future predictions? LEV answers this by re-analyzing the historical stream of daily EPSS scores for each vulnerability. It takes an opinionated approach, looking more deliberately at a vulnerability's history, which some would argue EPSS de-emphasizes. This gives security practitioners a new, structured view of past exploitation to weigh alongside EPSS's forecast.
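To make the idea concrete, here is a minimal Python sketch, not the white paper's exact formula. It assumes we have one vulnerability's daily EPSS scores, treats each score (a 30-day probability) divided by 30 as a rough per-day chance of exploitation, and assumes days are independent. Under those assumptions, the probability of at least one exploitation is one minus the probability of none: LEV ≈ 1 − ∏(1 − epssᵢ / 30). The function name `lev_sketch` and the sample history are hypothetical.

```python
def lev_sketch(daily_epss: list[float]) -> float:
    """Approximate probability that a CVE was exploited at least once in the window.

    Simplifying assumptions (not the white paper's exact definition):
    - each entry is that day's EPSS score, a 30-day exploitation probability;
    - dividing by 30 gives a rough per-day probability;
    - days are treated as independent events.
    """
    prob_never = 1.0
    for score in daily_epss:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"EPSS score out of range: {score}")
        # Multiply in the chance that exploitation did NOT occur on this day.
        prob_never *= 1.0 - score / 30.0
    # "Exploited at least once" is the complement of "never exploited".
    return 1.0 - prob_never


if __name__ == "__main__":
    # Hypothetical history: EPSS 0.60 for 60 days, then 0.01 for the last 30 days.
    history = [0.60] * 60 + [0.01] * 30
    print(f"latest EPSS: {history[-1]:.2f}")        # prints "latest EPSS: 0.01"
    print(f"LEV sketch:  {lev_sketch(history):.2f}")  # prints "LEV sketch:  0.71"
```

In this made-up history, the latest EPSS score alone (0.01) would push the CVE far down the queue, while the LEV-style estimate (about 0.71) flags that it was very likely exploited during the earlier high-score period. That is the kind of gap the framework is meant to surface.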

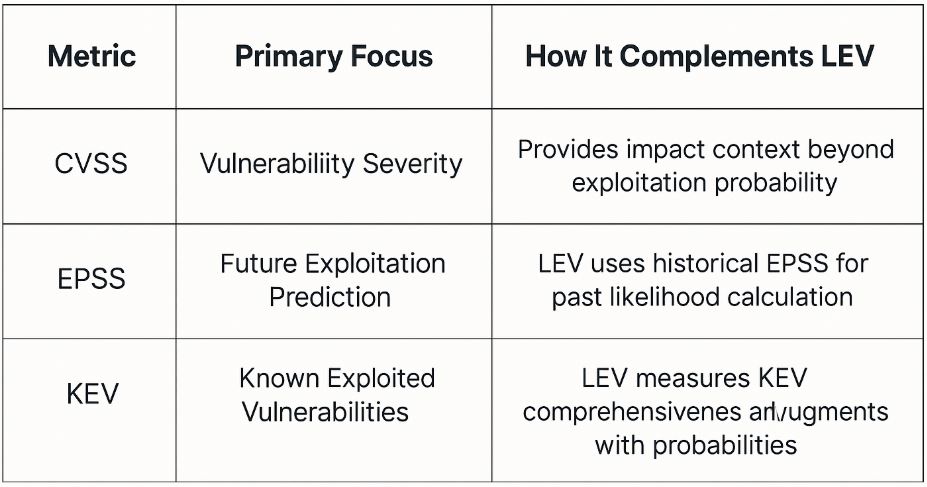

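Understanding how these measures relate shows why an integrated approach can be advantageous. In brief, CVSS rates technical severity, EPSS forecasts the likelihood of exploitation over the next 30 days, KEV confirms exploitation that has already been observed, and LEV estimates the probability that exploitation has already occurred, so each can complement the others.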

To build a practical plan, we must first be clear about the scale of change we're talking about. The shift security teams made from relying on static CVSS scores to adopting the predictive approach of EPSS was a "0 to 1" upgrade. It was a significant leap that fundamentally changed how we manage risk, allowing teams to cut through the noise and focus on the small subset of vulnerabilities that are actually likely to be exploited.

The introduction of NIST's LEV framework is not another revolution. It is a "1 to 1.1" iteration: an incremental improvement that offers a way to fine-tune an already highly effective, EPSS-driven program. This distinction is critical because it informs how we should structure our priorities. The most significant gains have already been made by moving to EPSS; LEV continues to build on them.

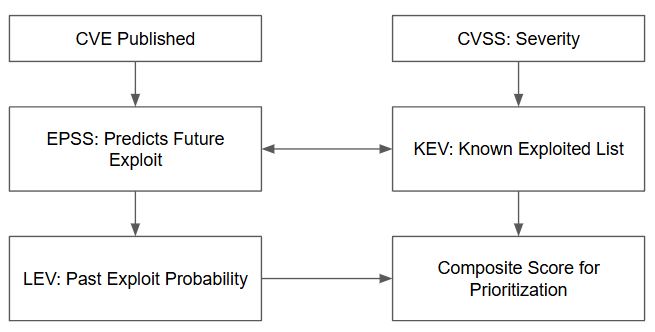

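Could all three work together? One way to combine them is to treat KEV as confirmed evidence to address first, EPSS as the forward-looking forecast that drives day-to-day prioritization, and LEV as a check for vulnerabilities whose estimated past exploitation is high even when their current EPSS score is low.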
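One simple way to express that workflow in code, assuming each CVE already has an EPSS score, a KEV flag, and a LEV estimate, is to rank by the strongest of the three signals, counting KEV membership as a probability of 1.0. This max-based composite is an assumption chosen for simplicity, and the `Signals` type, the `composite_score` and `rank` functions, and the sample inventory are hypothetical illustrations.

```python
from typing import NamedTuple


class Signals(NamedTuple):
    """Hypothetical per-CVE inputs gathered from EPSS, KEV, and a LEV calculation."""
    epss: float   # forward-looking: probability of exploitation in the next 30 days
    in_kev: bool  # confirmed: listed in the CISA KEV catalog
    lev: float    # backward-looking: estimated probability of past exploitation


def composite_score(s: Signals) -> float:
    """Take the strongest of the three signals; KEV membership counts as certainty."""
    kev = 1.0 if s.in_kev else 0.0
    return max(s.epss, kev, s.lev)


def rank(cves: dict[str, Signals]) -> list[tuple[str, float]]:
    """Return (cve_id, score) pairs, highest composite score first."""
    scored = [(cve, composite_score(sig)) for cve, sig in cves.items()]
    # Sort by descending score, then by CVE ID for a stable, readable order.
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))


if __name__ == "__main__":
    # Placeholder IDs and values, for illustration only.
    inventory = {
        "CVE-0000-0101": Signals(epss=0.03, in_kev=False, lev=0.71),  # quiet now, active before
        "CVE-0000-0102": Signals(epss=0.40, in_kev=False, lev=0.20),
        "CVE-0000-0103": Signals(epss=0.05, in_kev=True, lev=0.30),
    }
    for cve, score in rank(inventory):
        print(cve, round(score, 2))
```

In this made-up inventory, the KEV-listed CVE ranks first, and the CVE with a low current EPSS score but a high LEV estimate ranks ahead of the one with the higher current EPSS score. Taking the maximum means no single signal can lower a vulnerability's priority, which errs on the side of caution.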

Although LEV is not the same kind of leap teams made several years ago, when many were still relying on CVSS scores alone for vulnerability severity ratings, it has a strong chance of being widely integrated into security programs going forward.

The move from static CVSS severity ratings to measuring exploit probability with EPSS was a leap forward for vulnerability management teams, allowing them to focus on the future and prioritize what is most likely to be exploited next. NIST's new LEV framework doesn't challenge this; instead, it complements it, giving practitioners a structured way to incorporate vulnerability history.

Ultimately, the decision isn't about choosing between EPSS and LEV, since LEV incorporates historical EPSS data in its model. Instead, it's about treating prediction as the core mission and historical analysis as a valuable refinement.
